This article could also be titled “Read the manual!”

Jetro asked me whether the Powered Up smart hub can drive two train motors at the same time but in opposite directions. The idea is to build a train with one engine wagon at each end, like this:

So when the train is moving, both motors can be driven with just one control.

It is possible with medium motors (my Puppy robot does that when I move the App’s joystick along just one axis), and I checked with the only Powered Up train motor I have that it works too (medium motors and train motors seem to have the same ID, or no ID at all).

So I told Jetro it should be easy to rebuild my App for that specific purpose.

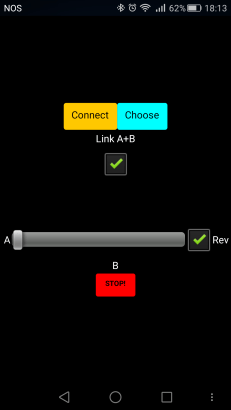

And it really was! I just removed the joystick-related blocks and added two sliders and a few checkboxes. At start only one slider is available, and both motors (A and B) run at the same speed in opposite directions:

A checkbox allows reversing the direction and the STOP! button quickly stops both motors.
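The App itself is built with App Inventor blocks, but the single-slider logic is simple enough to sketch in Python. This is only an illustration: the function name `linked_speeds` and the -100..100 speed range are my assumptions, not something taken from the blocks.

```python
def linked_speeds(slider, reverse=False):
    """Map one slider value to speeds for motors A and B.

    Assumes speeds are percentages in -100..100 (hypothetical range).
    Motor B always gets the opposite sign of motor A, because the two
    engine wagons face opposite ways on the train.
    """
    speed = max(-100, min(100, int(slider)))  # clamp to the assumed range
    if reverse:                               # the "reverse" checkbox
        speed = -speed
    return speed, -speed


# The STOP! button is just the zero case.
STOP = linked_speeds(0)
```

With the slider at 50, motor A gets 50 and motor B gets -50; ticking the reverse checkbox swaps the signs.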

If we want to control the two motors independently another slider appears:

Great, it worked with my Puppy, so I asked Jetro for his hub’s BT address so I could add it to my list of LEGO smart hubs.

But that’s silly!

Yes, I am lazy. But that’s really silly. There must be a way to scan for nearby BLE devices and choose the one we want to use.

So I read the documentation for the AI2 BluetoothLE extension. They wrote several How Tos, including a “Bluetooth Low Energy Basic Connection” that says “Make a basic app that scans for Bluetooth devices and allows you to connect to a selected device”. It also says it is for micro:bit and Arduino… but I’m a sceptical old dog.

I didn’t quite copy the example… as I was already using a List Picker button, I adapted the example to it.

So these are the blocks that allow us to use the ‘Choose’ button to select which device we want to control:

When the app starts it also starts scanning for nearby BLE devices. The first block above uses “when DeviceFound” to create a list with all devices found. This list keeps being refreshed while the scanning process is running.

The list contains the BT address of each device, its friendly name and the RSSI at the time of discovery (a kind of measure of the signal strength that we can use to decide if it is near or far from us).
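To make the three fields concrete, here is a small Python sketch that splits such a list into address, name and RSSI. It assumes the list arrives as one string with comma-separated entries, each looking like `address name rssi`; that layout, the sample addresses and the names `FoundDevice` and `parse_device_list` are my assumptions for illustration, so check what your version of the extension really returns.

```python
from typing import NamedTuple


class FoundDevice(NamedTuple):
    address: str  # BT address, e.g. "00:16:53:AA:BB:CC"
    name: str     # friendly name (may contain spaces)
    rssi: int     # signal strength in dBm, closer to 0 means nearer


def parse_device_list(raw):
    """Parse 'addr name rssi,addr name rssi,...' (assumed format)."""
    devices = []
    for entry in raw.split(","):
        parts = entry.split()
        if len(parts) < 2:
            continue  # skip empty or malformed entries
        try:
            rssi = int(parts[-1])
        except ValueError:
            continue  # last field was not a number
        # Everything between the address and the RSSI is the name.
        devices.append(FoundDevice(parts[0], " ".join(parts[1:-1]), rssi))
    return devices


# Made-up sample data, not captured from a real scan.
sample = "00:16:53:AA:BB:CC LEGO Move Hub -58,90:84:28:11:22:33 HUB NO.4 -71"
found = parse_device_list(sample)
nearest = max(found, key=lambda d: d.rssi)  # strongest signal
```

Picking the entry with the highest RSSI is a rough way to guess which hub is closest, which is handy when several hubs are switched on at once.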

So when we pick a device from the list (second block above), the text property of the button changes from “Choose” to the friendly name of the chosen device. This name is returned by “call FoundDeviceName” (not in the original HowTo, that’s why reading documentation is important).

When we press the Connect (or Disconnect) button we now use a slightly different method: “call Connect Index” instead of “call ConnectWithAddress”. I also took the chance to stop the scanning process or restart it (previously the scanning was always running).

So now my App can be used by anyone. I feel I’m almost a programmer by now 😀

This is how it works with Puppy:

Now I just need to get a second Powered Up train motor to test it with a real LEGO train.

A final note: today at the office I found lots of Bluetooth devices nearby. I’m not sure that all of them are BLE, but that’s what the App adds to the list. So I should add a way to filter this list for LEGO-only devices. That means devices whose BT address starts with “00:16:53” (all my BOOST smart hubs) or “90:84:28” (my Powered Up “HUB NO.4” smart hub)… but if LEGO sells lots of these I will probably have to add a few other prefixes.
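The filter idea could look something like this in Python. It is only a sketch of the logic, not the blocks I will end up using: the two prefixes are the ones seen on my own hubs, the function names are made up, and the sample addresses are invented.

```python
# Address prefixes seen on my own hubs; other LEGO hubs may use different ones.
LEGO_PREFIXES = ("00:16:53", "90:84:28")


def is_lego_hub(address, prefixes=LEGO_PREFIXES):
    """True if the BT address starts with one of the known prefixes."""
    # str.startswith accepts a tuple, so one call checks every prefix.
    return address.strip().upper().startswith(prefixes)


def lego_only(addresses):
    """Keep only the addresses that look like LEGO smart hubs."""
    return [a for a in addresses if is_lego_hub(a)]


# Invented addresses, for illustration only.
scan = ["00:16:53:AA:BB:CC", "F4:5C:89:01:02:03", "90:84:28:11:22:33"]
hubs = lego_only(scan)
```

Keeping the prefixes in one tuple means that supporting a new hub family is a one-line change.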

EDIT: I found a bug when using the APK I built: nothing appears in the list picker. The BLE extension is lacking proper permissions. You can try the workaround suggested, or go to your Android settings and, under the App permissions “Your Location”, add the App.